Even compared to fixing bugs in 25-year-old DOS games, this is possibly the most useless thing I've done all year, yet to me also one of the most fun. During my time off for the holiday season, I set about making my most recent games run on two-decade-old hardware.

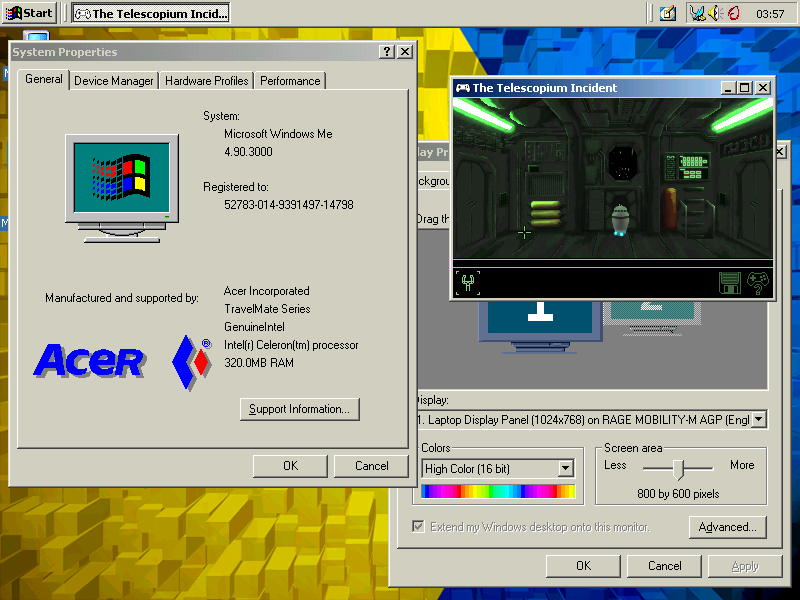

When I started development of my homegrown adventure game engine in the early 2000s, people were most commonly running Windows 98 Second Edition or Windows Millennium Edition, with some actually opting for Windows XP's daddy, Windows 2000, instead. In fact, the laptop I was developing it on is the very same one I've been using for these tests.

This particular laptop is powered by a 550MHz Intel Celeron, with 320MB of RAM (expanded from the original 64MB), a whopping 5GB hard drive, and for this story the most important thing: an ATi Rage Mobility M graphics chipset running at 800x600. Even in its day, this wasn't a graphics powerhouse. But I figured that, as the engine started life on this laptop, it might be fun to see whether I could run the games I created with it recently on that now more than 20-year-old piece of kit.

What had to change to run on Windows Me?

Simply, nothing. I initially wrote the engine in plain C++ against the bare Win32 API, using Microsoft Visual C++ 5 and 6. Although current versions are compiled with Microsoft Visual C++ 2005, I've not made significant use of any Win32 functionality more recent than what that old API provides, simply because I've not had a need for it. The engine is programmed against DirectX 8, which was readily available on Windows 9x (even DirectX 9 is available for it).

I don't have a Windows 98 (or even Windows 95) installation, but as long as DirectX 8 or better is installed, along with a graphics device/driver combination that supports the DirectX 8 API, it should work. Indeed, others have reported the games working on a 233MHz Pentium MMX with an ATi Rage XL on Windows 98. Performance isn't great on those old machines, but it is definitely playable.

What had to change to run on the old ATi card?

Despite targeting the DirectX 8 API (which can be expected to work properly with DX6 class hardware and later) there were a few shortcuts that I took over the years, mostly by virtue of having access to much better hardware than that mobile ATi Rage chipset, as my main machine at that time sported a GeForce 2MX and eventually a GeForce 3.

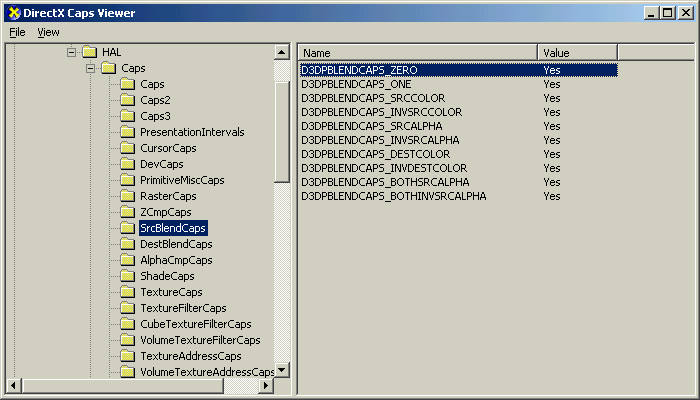

Since DirectX 11 there have been well-defined feature levels that specify what a card must provide to be considered compliant with a given version of the API. In the earlier versions it was all about the driver filling in a large structure describing the capabilities of the hardware it could expose. Wanted to know whether cube maps were supported? Check the D3DCAPS8 structure.

The DirectX Caps Viewer that came with the SDK provided a convenient way to check the capabilities in a human readable manner as shown above. When coding, you'd have to check these programmatically and adjust your programme's behaviour to match (or just refuse to run at all).
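Such a programmatic check boils down to testing bits in the structure that `IDirect3D8::GetDeviceCaps` fills in. Below is a minimal sketch of that idea, not the engine's actual code. `CapsSubset`, `Features` and `detectFeatures` are hypothetical names, and the struct is a stand-in for the handful of `D3DCAPS8` fields involved so that the example builds without the DirectX SDK. The bit values are written from memory of the SDK headers and should be treated as an assumption.

```cpp
// Stand-in for the few D3DCAPS8 fields used here, so the sketch builds
// without the DirectX 8 SDK. The real structure has many more members.
struct CapsSubset {
    unsigned long DevCaps;
    unsigned long PrimitiveMiscCaps;
    unsigned long TextureCaps;
};

// Bit values written from memory of the SDK's d3d8caps.h (an assumption);
// real code should include <d3d8.h> and use the SDK names in the comments.
const unsigned long kHwTransformAndLight = 0x00010000UL; // D3DDEVCAPS_HWTRANSFORMANDLIGHT
const unsigned long kColourWriteEnable   = 0x00000080UL; // D3DPMISCCAPS_COLORWRITEENABLE
const unsigned long kCubeMap             = 0x00000800UL; // D3DPTEXTURECAPS_CUBEMAP

// The optional features this sketch cares about, decoded once at start-up.
struct Features {
    bool hardwareTnL;
    bool colourWriteMask;
    bool cubeMaps;
};

// Translate raw capability bits into named booleans.
Features detectFeatures(const CapsSubset& caps) {
    Features f;
    f.hardwareTnL     = (caps.DevCaps & kHwTransformAndLight) != 0;
    f.colourWriteMask = (caps.PrimitiveMiscCaps & kColourWriteEnable) != 0;
    f.cubeMaps        = (caps.TextureCaps & kCubeMap) != 0;
    return f;
}
```

Decoding the bits once into a small struct keeps the rest of the code free of bit masks: each workaround can then simply branch on something like `features.colourWriteMask`.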

There are a few things that, while working properly on modern hardware (anything more recent than, say, 2003), just weren't necessarily supported on the graphics hardware from the late 1990s. And, since I hadn't bothered to check those capabilities, the games would crash or glitch when trying to make use of them. Fortunately, almost all of them were easily worked around.

No hardware (or mixed) vertex processing

With the introduction of the GeForce, which touted "hardware transform and lighting", a significant part of the 3D pipeline could be accelerated with specialised hardware, offloading the vertex calculations from the CPU. When creating a Direct3D device, you can specify whether you want vertex processing to take place in hardware on the GPU, in software on the CPU, or a mix of the two. The ATi card does not support hardware T&L, so I added fallbacks from hardware to mixed and even software-only vertex processing.
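The fallback chain can be sketched roughly as follows. This is an illustration under assumptions, not the engine's code: `TryCreateDevice` is a hypothetical callback that would wrap `IDirect3D8::CreateDevice` with the given behaviour flag, `simulateNoHardwareTnL` merely pretends to be a card without hardware T&L, and the flag values are stand-ins written from memory of the SDK headers.

```cpp
#include <cstddef>

// Stand-ins for the SDK's behaviour flags (values from memory, an assumption).
const unsigned long kHardwareVP = 0x40UL; // D3DCREATE_HARDWARE_VERTEXPROCESSING
const unsigned long kMixedVP    = 0x80UL; // D3DCREATE_MIXED_VERTEXPROCESSING
const unsigned long kSoftwareVP = 0x20UL; // D3DCREATE_SOFTWARE_VERTEXPROCESSING

// Hypothetical callback: tries to create the device with one behaviour flag
// and reports success. Real code would call IDirect3D8::CreateDevice here.
typedef bool (*TryCreateDevice)(unsigned long behaviourFlag);

// Try hardware first, then mixed, then software-only vertex processing.
// Returns the flag that worked, or 0 if no device could be created at all.
unsigned long createDeviceWithFallback(TryCreateDevice tryCreate) {
    const unsigned long order[] = { kHardwareVP, kMixedVP, kSoftwareVP };
    for (std::size_t i = 0; i < sizeof(order) / sizeof(order[0]); ++i) {
        if (tryCreate(order[i])) {
            return order[i];
        }
    }
    return 0;
}

// Simulation of a card without hardware T&L: only software processing works.
bool simulateNoHardwareTnL(unsigned long behaviourFlag) {
    return behaviourFlag == kSoftwareVP;
}
```

With the simulated card, `createDeviceWithFallback(simulateNoHardwareTnL)` ends up on the software flag after the first two attempts fail.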

No colour write masking

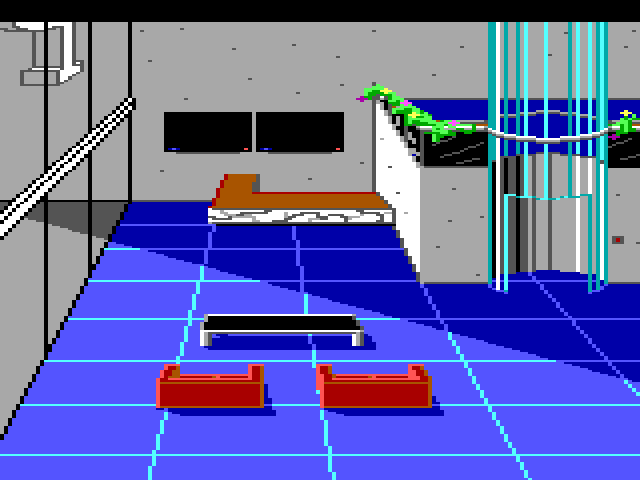

The backdrops in my adventure games consist conceptually of a visible 2D image, and 0 or more invisible layers of depth information. These depth layers are drawn into the graphics card's Z buffer as simple screen-sized quads with colour write disabled (so no visible pixels are drawn, only writes to the Z buffer are permitted).


The ATi card didn't have the D3DPMISCCAPS_COLORWRITEENABLE capability bit set, so it would overlay the depth information over the visible image. The solution would have been to either write the visible image after writing the depth buffer, with Z test disabled, or to fake disabling the colour writes. I picked the latter and chose to set the blend function to just retain what was already in the colour buffer and zero out the incoming data.
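To illustrate the blend trick: with alpha blending enabled, a source factor of zero and a destination factor of one, every colour written becomes `src * 0 + dst * 1`, which is just what was already there, while the Z write still happens. The sketch below models that choice in plain C++. `DepthOnlyState`, `depthOnlyState` and `blendChannel` are hypothetical names, and the enum values stand in for `D3DBLEND_ZERO` and `D3DBLEND_ONE`. In a real Direct3D 8 renderer these would be set through `SetRenderState` with `D3DRS_ALPHABLENDENABLE`, `D3DRS_SRCBLEND` and `D3DRS_DESTBLEND`.

```cpp
// Stand-ins for D3DBLEND_ZERO and D3DBLEND_ONE.
enum BlendFactor { BlendZero, BlendOne };

// The state needed to draw a depth-only layer.
struct DepthOnlyState {
    bool useColourWriteMask; // true: disable colour writes via the write mask
    bool alphaBlendEnabled;  // true: fake it with blending instead
    BlendFactor srcFactor;
    BlendFactor dstFactor;
};

// Pick the real colour write mask when the card has it, else the blend fake.
DepthOnlyState depthOnlyState(bool hasColourWriteEnable) {
    DepthOnlyState s;
    s.useColourWriteMask = hasColourWriteEnable;
    s.alphaBlendEnabled  = !hasColourWriteEnable;
    s.srcFactor = hasColourWriteEnable ? BlendOne  : BlendZero; // drop incoming colour
    s.dstFactor = hasColourWriteEnable ? BlendZero : BlendOne;  // keep existing colour
    return s;
}

// Model of the blend equation for one 8-bit channel: src*sf + dst*df, clamped.
unsigned blendChannel(unsigned src, unsigned dst, BlendFactor sf, BlendFactor df) {
    unsigned result = (sf == BlendOne ? src : 0u) + (df == BlendOne ? dst : 0u);
    return result > 255u ? 255u : result;
}
```

Feeding the fallback factors into `blendChannel` returns the destination value unchanged, whatever the incoming colour is.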

This would have been a lot easier if things like depth textures had existed in DirectX 8. As it stands, this is actually one of the slowest aspects of the game engine, as there is potentially a lot of overdraw in this step and this hardware is capable of rendering a maximum of 75 million pixels/texels per second.

No stencil buffer

The engine supports clipping the rendering of text and sprites and uses the stencil buffer to allow arbitrary clipping areas. Unfortunately the ATi card supports only 16-bit depth buffers and no stencil buffers at all.

In practice, this functionality is used only to clip to a single rectangle at any one time and this can also be achieved by simply adjusting the viewport, which is what I ended up doing.
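A rough sketch of turning a clip rectangle into a viewport follows. It is an illustration only: `Viewport` mirrors the fields of `D3DVIEWPORT8`, while `ClipRect` and `viewportForClip` are hypothetical names. It assumes the rectangle's right and bottom edges are exclusive, and it leaves out any adjustment of the transforms that a changed viewport may require for geometry that isn't already in screen space.

```cpp
#include <algorithm>

// Mirrors the fields of D3DVIEWPORT8 so the sketch builds without the SDK.
struct Viewport {
    unsigned long X, Y, Width, Height;
    float MinZ, MaxZ;
};

// Hypothetical clip rectangle in screen pixels; right/bottom are exclusive.
struct ClipRect {
    long left, top, right, bottom;
};

// Intersect the clip rectangle with the screen and express it as a viewport.
// A result with zero width or height means nothing is visible.
Viewport viewportForClip(const ClipRect& clip,
                         unsigned long screenWidth,
                         unsigned long screenHeight) {
    long l = std::max(clip.left, 0L);
    long t = std::max(clip.top, 0L);
    long r = std::min(clip.right, static_cast<long>(screenWidth));
    long b = std::min(clip.bottom, static_cast<long>(screenHeight));
    if (r < l) r = l; // rectangle entirely off-screen horizontally
    if (b < t) b = t; // rectangle entirely off-screen vertically

    Viewport vp;
    vp.X      = static_cast<unsigned long>(l);
    vp.Y      = static_cast<unsigned long>(t);
    vp.Width  = static_cast<unsigned long>(r - l);
    vp.Height = static_cast<unsigned long>(b - t);
    vp.MinZ   = 0.0f;
    vp.MaxZ   = 1.0f;
    return vp;
}
```

A caller would probably skip drawing altogether when the width or height comes back as zero rather than hand an empty viewport to the device.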

Can't render to 32-bit textures

Although the card isn't powerful enough to run the games in 32-bit colour mode, it can do 16-bit colour mode. The thumbnails for save games are generated by rendering to a texture and I had initially hardcoded the engine to render to 32-bit textures. This failed on the ATi card, but allowing for 16-bit render targets worked like a charm.
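Picking a render target format could look something like the sketch below: walk a preference list from 32-bit down to 16-bit and take the first format the card accepts. The names are hypothetical. `IsRenderTargetSupported` stands for a callback that would wrap `IDirect3D8::CheckDeviceFormat` with `D3DUSAGE_RENDERTARGET`, the enum stands in for the corresponding `D3DFMT_*` values, and `simulate16BitOnlyCard` only pretends to be a card like the one described here.

```cpp
#include <cstddef>

// Stand-ins for D3DFMT_A8R8G8B8, D3DFMT_X8R8G8B8, D3DFMT_R5G6B5, D3DFMT_X1R5G5B5.
enum Format {
    FormatUnknown,
    FormatA8R8G8B8,
    FormatX8R8G8B8,
    FormatR5G6B5,
    FormatX1R5G5B5
};

// Hypothetical callback: can a texture of this format be used as a render
// target? Real code would ask IDirect3D8::CheckDeviceFormat.
typedef bool (*IsRenderTargetSupported)(Format format);

// Prefer 32-bit targets, fall back to 16-bit ones.
// Returns FormatUnknown if the card accepts none of them.
Format pickThumbnailFormat(IsRenderTargetSupported supported) {
    const Format preference[] = {
        FormatA8R8G8B8, FormatX8R8G8B8, FormatR5G6B5, FormatX1R5G5B5
    };
    for (std::size_t i = 0; i < sizeof(preference) / sizeof(preference[0]); ++i) {
        if (supported(preference[i])) {
            return preference[i];
        }
    }
    return FormatUnknown;
}

// Simulation of a card that can only render to 16-bit textures.
bool simulate16BitOnlyCard(Format format) {
    return format == FormatR5G6B5 || format == FormatX1R5G5B5;
}
```

On the simulated card the two 32-bit candidates are rejected and the function settles on the first 16-bit format in the list.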

Why bother?

Just because it was fun to do. I find there is something inherently cool about new games running on old systems. And as a side effect, I ended up improving performance of my engine in general, as well as fixing a few minor bugs.

Of course, the chances of anybody actually running these games seriously, rather than just testing, on hardware and software that old is basically nil. I just figured: if I can support these old systems with just a few lines of code without compromising the ability to run on modern systems, why wouldn't I?
